Introduction to Scientific Computation

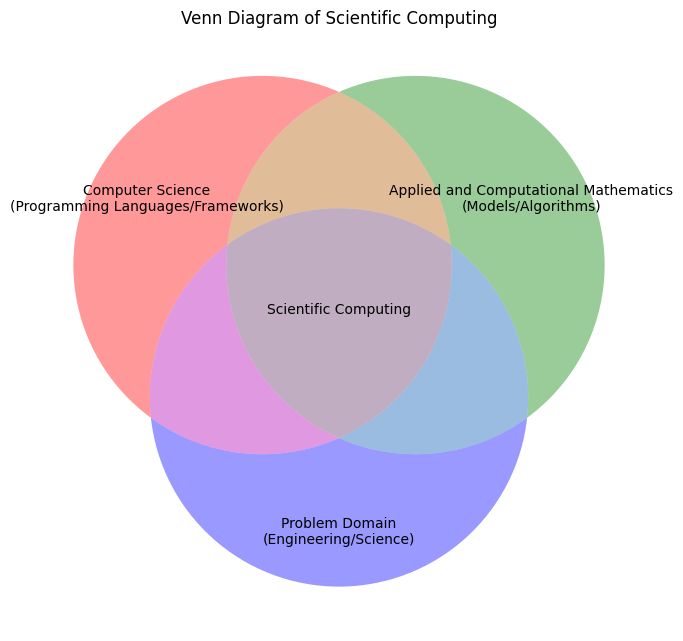

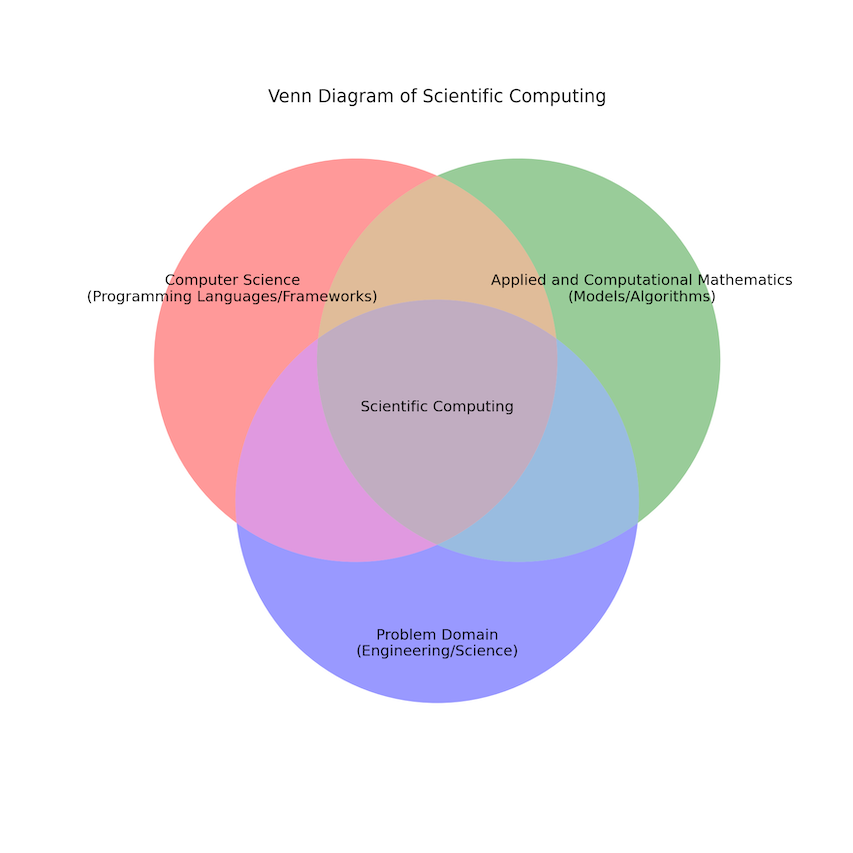

Scientific Computation (Scientific Computing, Computational science)

“Scientific Computing is the collection of tools, techniques, and theories required to solve on a computer mathematical models of problems in Science and Engineering” [Golub and Ortega, 2014].

As such, Scientific Computing covers a wide range of topics and fields, and if you ask 10 different domain experts to define the term Scientific Computing, you will probably get 15 different answers.

This is, in a way, also reflected in the editorial comments on the Wikipedia article on Computational Science.

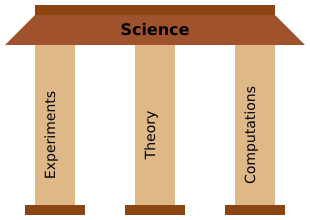

Nevertheless, Scientific Computing is considered the third pillar of science, the others being Experiments and Theory.

Many scientific disciplines employ Scientific Computing to make discoveries, e.g.

Global ocean/climate modeling

Computational fluid dynamics

Seismology

Biophysics

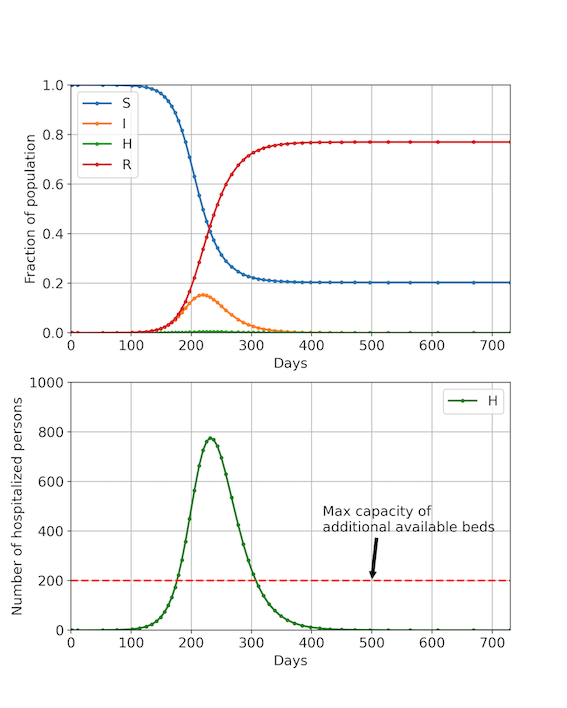

Population dynamics (e.g. disease spreading)

Economics

Medical imaging

Material science

Challenge: Find me a scientific discipline where no computational methods are employed!

The COVID-19 pandemic was a very good example of a typical science problem involving Scientific Computing. Such problems typically consist of the following steps:

Mathematical Modeling

Analysis of the mathematical model (Existence, Uniqueness, Continuity)

Numerical methods (computational complexity, stability, accuracy)

Realization (implementation)

Postprocessing

Validation

This semester, we will learn about methods that help you, for example, to

model and predict the spreading of diseases like COVID-19

simulate the generation of patterns in biology

understand how images are compressed

```python
from IPython.display import YouTubeVideo

# Embed the lecture video (works inside a Jupyter notebook)
YouTubeVideo('nw2bPnhtxN8', width=800, height=500)
```

In general, we can think of Scientific Computing as an interdisciplinary, computation-based approach to scientific discovery.


Machine Representation of Numbers

Today we will talk about one important and unavoidable source of errors, namely the way a computer deals with numbers.

Let’s start with two simple tests.

Define two numbers \(a=0.2\) and \(b=0.2\) and test whether their sum is equal to \(0.4\).

Now define two numbers \(a=0.2\) and \(b=0.1\) and test whether their sum is equal to \(0.3\).

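```python
# Write your code here
a = 0.2
b = 0.1
expected = 0.3  # named "expected" to avoid shadowing the built-in sum()

if (a + b) == expected:
    print("That is what I expected!!")
else:
    print("What the hell is going on??")

# How far is the computed sum from 0.3?
diff = (a + b) - expected
print(f"{diff}")
```

Output:

```
What the hell is going on??
5.551115123125783e-17
```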
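Both tests can be run side by side. In this minimal sketch the first comparison happens to succeed: \(\mathrm{fl}(0.2) + \mathrm{fl}(0.2)\) is exactly \(2 \cdot \mathrm{fl}(0.2)\), and doubling only shifts the binary exponent, so the result coincides with \(\mathrm{fl}(0.4)\). The second comparison fails. The standard-library function `math.isclose` compares up to a relative tolerance instead, which is one common way to avoid exact equality tests on floating point numbers.

```python
import math

a, b = 0.2, 0.2
test1 = (a + b) == 0.4   # True: 0.2 + 0.2 is exactly 2*fl(0.2) = fl(0.4)

a, b = 0.2, 0.1
test2 = (a + b) == 0.3   # False: the rounding errors of 0.1, 0.2 and 0.3 do not cancel

# Compare with a relative tolerance (default rel_tol=1e-09) instead of exact equality
test3 = math.isclose(a + b, 0.3)

print(test1, test2, test3)  # True False True
```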

Why is that? The reason is the way numbers are represented on a computer, which will be the topic of the first part of the lecture.
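To see what is actually stored, Python's standard `fractions` and `decimal` modules can display the exact value of a float. In this short sketch, `Fraction(0.1)` gives the exact binary fraction behind the literal `0.1`. Its denominator is a power of two, and it is slightly larger than \(1/10\).

```python
from decimal import Decimal
from fractions import Fraction

# The exact rational number stored for the literal 0.1
exact = Fraction(0.1)
print(exact)                       # 3602879701896397/36028797018963968
print(exact.denominator == 2**55)  # True

# The same value written out in decimal: slightly larger than 1/10
print(Decimal(0.1))

# 0.1 + 0.2 and 0.3 are stored as two different (nearby) binary fractions
print(Fraction(0.1 + 0.2) == Fraction(0.3))  # False
```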

After the lecture, I recommend you take a look which discusses the phenomenon we just observed in some detail.

Positional System

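In everyday life, we represent numbers using the positional system. For instance, we write \(1234.987\) to denote the number

\[ 1234.987 = 1 \cdot 10^{3} + 2 \cdot 10^{2} + 3 \cdot 10^{1} + 4 \cdot 10^{0} + 9 \cdot 10^{-1} + 8 \cdot 10^{-2} + 7 \cdot 10^{-3} \]

using \(10\) as the base. This is also known as the decimal system.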

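In general, for any \(\beta \in \mathbb{N}\), \(\beta \geqslant 2\), we use the positional representation

\[ x = (-1)^s \, [a_n a_{n-1} \ldots a_0 . a_{-1} a_{-2} \ldots a_{-m}]_{\beta} \]

with \(a_n \neq 0\) to represent the number

\[ x = (-1)^s \sum_{k=-m}^{n} a_k \beta^{k}. \]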

Here,

\(\beta\) is called the base

\(a_k \in \{0, 1, \ldots, \beta-1\}\) are called the digits

\(s \in \{0,1\}\) defines the sign

\(a_n a_{n-1}\ldots a_0\) is the integer part

\(a_{-1}a_{-2}\ldots a_{-m}\) is called the fractional part

The point between \(a_0\) and \(a_{-1}\) is generally called the radix point
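The formula for the value of a positional representation can be evaluated directly. This is a minimal sketch: the helper `positional_value` and its argument layout are our own illustrative choices. It takes the integer digits \(a_n \ldots a_0\) and the fractional digits \(a_{-1} \ldots a_{-m}\) as separate lists. `Fraction` keeps the arithmetic exact, so no new rounding errors sneak in.

```python
from fractions import Fraction

def positional_value(int_digits, frac_digits, base, sign=0):
    """Exact value of (-1)^sign * [a_n ... a_0 . a_{-1} ... a_{-m}]_base.

    int_digits  = [a_n, ..., a_1, a_0]     (integer part)
    frac_digits = [a_{-1}, ..., a_{-m}]    (fractional part)
    """
    if any(not 0 <= d < base for d in int_digits + frac_digits):
        raise ValueError("every digit must lie in {0, ..., base-1}")
    beta = Fraction(base)
    n = len(int_digits) - 1
    # a_n * beta^n + ... + a_0 * beta^0
    value = sum(d * beta ** (n - i) for i, d in enumerate(int_digits))
    # a_{-1} * beta^-1 + ... + a_{-m} * beta^-m
    value += sum(d * beta ** -k for k, d in enumerate(frac_digits, start=1))
    return (-1) ** sign * value

print(positional_value([1, 2, 3, 4], [9, 8, 7], 10))  # 1234987/1000
print(positional_value([1, 0], [2], 3))               # 11/3
```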

Exercise 1

Write down the positional representation of the number \(3\frac{2}{3}\) for both the base \(\beta=10\) and \(\beta=3\).

Solution to Exercise 1

\(\beta = 10: [3.666666666\cdots]_{10}\)

\(\beta = 3: 1 \cdot 3^{1} + 0 \cdot 3^{0} + 2 \cdot 3^{-1} = [10.2]_{3}\)
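Going the other way, we can compute the digits of a number in base \(\beta\). Repeated division gives the integer part, and repeated multiplication by \(\beta\) gives the fractional part. This sketch uses a hypothetical helper `to_base`, restricted to non-negative rationals and truncated after `n_frac` fractional digits. It reproduces both answers of Exercise 1 and shows that \(1/10\) has a non-terminating expansion in base 2.

```python
from fractions import Fraction

def to_base(x, base, n_frac):
    """Digits of a non-negative rational x in the given base.

    Integer part: repeated division by base.
    Fractional part: repeated multiplication by base, truncated after n_frac digits.
    """
    x = Fraction(x)
    if x < 0:
        raise ValueError("x must be non-negative")
    int_part = x.numerator // x.denominator
    frac = x - int_part

    int_digits = []
    while int_part > 0:
        int_digits.append(int_part % base)
        int_part //= base
    int_digits = int_digits[::-1] or [0]

    frac_digits = []
    for _ in range(n_frac):
        frac *= base
        d = frac.numerator // frac.denominator  # next digit = integer part
        frac_digits.append(d)
        frac -= d
    return int_digits, frac_digits

print(to_base(Fraction(11, 3), 10, 6))  # ([3], [6, 6, 6, 6, 6, 6])
print(to_base(Fraction(11, 3), 3, 6))   # ([1, 0], [2, 0, 0, 0, 0, 0])
print(to_base(Fraction(1, 10), 2, 8))   # ([0], [0, 0, 0, 1, 1, 0, 0, 1]), continues forever
```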

To represent numbers on a computer, the most common bases are

\(\beta = 2\) (binary),

\(\beta=10\) (decimal)

\(\beta = 16\) (hexadecimal).

For the latter, one uses \(0, 1, \ldots, 9\) and A, B, C, D, E, F to represent the digits. For \(\beta = 2, 10, 16\), the radix point is also called the binary point, decimal point and hexadecimal point, respectively.
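Python's built-ins can display integers in these bases, and `float.hex()` shows the stored binary value of a float using hexadecimal digits. In this small sketch, the number 2024 is an arbitrary example.

```python
# Integers in base 2, 10 and 16 (letters a-f play the role of the digits 10-15)
print(bin(2024), 2024, hex(2024))             # 0b11111101000 2024 0x7e8
print(int("7E8", 16), int("11111101000", 2))  # 2024 2024

# The stored value of 0.1: hexadecimal mantissa digits times a power of 2
print((0.1).hex())                                   # 0x1.999999999999ap-4
print(float.fromhex("0x1.999999999999ap-4") == 0.1)  # True
```

The repeating hexadecimal digits `999...` are another view of the infinite binary expansion of \(1/10\).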

We have already seen that for many (actually most!) numbers, the fractional part would have to be infinitely long to represent the number exactly. But a computer has only a finite amount of storage. It can therefore keep only a fixed number of digits for each number we wish to represent.

This, of course, automatically introduces errors whenever a number cannot be represented exactly by the finite number of available digits.

Fixed-point system (fasttall system)

Use \(N=n+1+m\) digits/memory locations to store the number \(x\) written as above. Since the position of the binary/decimal point is fixed, we cannot represent large numbers \(|x| \geqslant \beta^{n+1}\) or small nonzero numbers \(|x| < \beta^{-m}\).

For example, nowadays we often use 16 (decimal) digits on a computer. Suppose we distribute them evenly, with the same number of digits before and after the decimal point. Then the range of representable positive numbers lies roughly between \(10^{-8}\) and \(10^{8}\). This is very inconvenient!

Also, numbers towards the lower end of this range cannot be represented as accurately as numbers close to the upper end. The absolute rounding error is at most \(\tfrac{1}{2}\beta^{-m}\) everywhere, so the relative error grows as the numbers get smaller.
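A small simulation of a decimal fixed-point system makes both drawbacks concrete. This sketch rests on our own assumptions: \(n+1 = 8\) integer digits, \(m = 8\) fractional digits, and rounding to the nearest representable number. The hypothetical helper `to_fixed` uses exact `Fraction` arithmetic.

```python
from fractions import Fraction

N_INT, M_FRAC = 8, 8  # assumed split of 16 decimal digits

def to_fixed(x, n_int=N_INT, m_frac=M_FRAC):
    """Round x to the nearest number with m_frac decimal digits after the point."""
    x = Fraction(x)
    if abs(x) >= 10**n_int:
        raise OverflowError("too large for this fixed-point format")
    return Fraction(round(x * 10**m_frac), 10**m_frac)

def rel_error(x):
    """Relative rounding error |x - fix(x)| / |x| (exact)."""
    x = Fraction(x)
    return abs(x - to_fixed(x)) / abs(x)

large = Fraction(10**7, 3)      # 3333333.333...
small = Fraction(1, 3 * 10**7)  # 0.0000000333...
print(float(rel_error(large)))  # 1e-15
print(float(rel_error(small)))  # 0.1, i.e. only one correct digit
```

Both numbers carry the same absolute error bound, but the small one keeps only a single correct digit.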

As a remedy, a modified representation system for numbers was introduced, known as the normalized floating point system.

Normalized floating point system (flyttall system)

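Returning to our first example, we can write

\[ 1234.987 = 0.1234987 \cdot 10^{4}. \]

In general, we write

\[ x = (-1)^s \cdot 0.a_1 a_2 \ldots a_t \cdot \beta^{e} = (-1)^s \cdot m \cdot \beta^{e-t}. \]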

Here,

\(t \in \mathbb{N}\) is the number of significant digits (gjeldene siffre)

\(e\) is an integer called the exponent (eksponent)

\(m = a_1 a_2 \ldots a_t \in \mathbb{N}\) is known as the mantissa (mantisse).

The exponent \(e\) defines the scale of the represented number. Typically, \(e \in \{e_{\mathrm{min}}, \ldots, e_{\mathrm{max}}\}\), with \(e_{\mathrm{min}} < 0\) and \(e_{\mathrm{max}} > 0\).

The number of significant digits \(t\) determines the relative accuracy (relativ nøyaktighet).
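Python exposes this decomposition directly. Two cases are shown in this brief sketch. For \(\beta = 10\), `Decimal.as_tuple()` returns the digits and an exponent that refers to the last digit. From these we can read off the normalized exponent \(e\) in \(0.a_1 a_2 \ldots a_t \cdot 10^{e}\). For \(\beta = 2\), `math.frexp` returns a mantissa in \([0.5, 1)\). This is exactly the binary form \(0.1 a_2 \ldots a_t \cdot 2^{e}\) with \(a_1 = 1\).

```python
import math
from decimal import Decimal

# beta = 10: 1234.987 = 0.1234987 * 10^4
sign, digits, exponent = Decimal("1234.987").as_tuple()
t = len(digits)       # number of significant digits: 7
e = t + exponent      # exponent for the form 0.a1a2...at * 10^e: 7 + (-3) = 4
print(sign, digits, t, e)  # 0 (1, 2, 3, 4, 9, 8, 7) 7 4

# beta = 2: x = m * 2^e with 0.5 <= |m| < 1, i.e. the leading binary digit a1 is 1
m, e2 = math.frexp(1234.987)
print(m, e2)                           # m is about 0.603, e2 = 11 since 2^10 <= 1234.987 < 2^11
print(math.ldexp(m, e2) == 1234.987)   # True: scaling by a power of 2 is exact
```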

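We define the finite set of available floating point numbers

\[ \mathbb{F} = \mathbb{F}(\beta, t, e_{\mathrm{min}}, e_{\mathrm{max}}) = \{0\} \cup \left\{ x = (-1)^s \cdot 0.a_1 a_2 \ldots a_t \cdot \beta^{e} \;:\; s \in \{0,1\},\; a_k \in \{0, \ldots, \beta-1\},\; e_{\mathrm{min}} \leqslant e \leqslant e_{\mathrm{max}} \right\}. \]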

Typically to enforce a unique representation and to ensure maximal relative accuracy, one requires that \(a_1 \neq 0\) for non-zero numbers.

Exercise 2

What is the smallest (non-zero!) and the largest number you can represent with \(\mathbb{F}\)?

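Solution to Exercise 2

With \(a_1 \neq 0\), the smallest positive number is \(0.10\ldots0 \cdot \beta^{e_{\mathrm{min}}} = \beta^{e_{\mathrm{min}}-1}\). The largest is \(0.(\beta-1)(\beta-1)\ldots(\beta-1) \cdot \beta^{e_{\mathrm{max}}} = \beta^{e_{\mathrm{max}}}(1-\beta^{-t})\).

Conclusion: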

Take any number \(x\) satisfying \(\beta^{e_{\mathrm{min}}-1} \leqslant |x| \leqslant \beta^{e_{\mathrm{max}}}(1-\beta^{-t})\) that is not in \(\mathbb{F}\). It can be approximated by a floating point number \(\mathrm{fl}(x) \in \mathbb{F}\), obtained by rounding to the closest number in \(\mathbb{F}\).
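For a toy system, we can list the set \(\mathbb{F}\) completely and check the bounds above numerically. This sketch assumes the toy parameters \(\beta = 2\), \(t = 3\), \(e_{\mathrm{min}} = -1\), \(e_{\mathrm{max}} = 2\) and the normalization \(a_1 \neq 0\). The helper `float_set` is hypothetical and builds each number as \(m \cdot \beta^{e-t}\) with the integer mantissa \(m = a_1 a_2 \ldots a_t\). The printed list also shows that the numbers in \(\mathbb{F}\) are not evenly spaced: the gap doubles every time the exponent grows by one.

```python
from fractions import Fraction
from itertools import product

def float_set(beta, t, e_min, e_max):
    """All positive normalized numbers 0.a1a2...at * beta^e = m * beta^(e-t) with a1 != 0."""
    numbers = set()
    for e in range(e_min, e_max + 1):
        for digits in product(range(beta), repeat=t):
            if digits[0] == 0:  # normalization: a1 != 0
                continue
            # integer mantissa m = a1 a2 ... at (in base beta)
            m = sum(d * beta ** (t - 1 - i) for i, d in enumerate(digits))
            numbers.add(Fraction(m) * Fraction(beta) ** (e - t))
    return sorted(numbers)

beta, t, e_min, e_max = 2, 3, -1, 2
F = float_set(beta, t, e_min, e_max)
print([str(x) for x in F])
# ['1/4', '5/16', '3/8', '7/16', '1/2', '5/8', '3/4', '7/8',
#  '1', '5/4', '3/2', '7/4', '2', '5/2', '3', '7/2']
print(F[0] == Fraction(beta) ** (e_min - 1))                           # True
print(F[-1] == Fraction(beta) ** e_max * (1 - Fraction(beta) ** -t))  # True
```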

The relative rounding error is bounded by the relative machine precision \(\epsilon\):

\[ \dfrac{|x-\mathrm{fl}(x)|}{|x|} \leqslant \epsilon := \frac{\beta^{1-t}}{2} \]

\(0 < |x| < \beta^{e_{\mathrm{min}}-1}\) leads to underflow.

\(|x| > \beta^{e_{\mathrm{max}}}(1-\beta^{-t})\) leads to overflow.
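For IEEE double precision, \(\beta = 2\) and \(t = 53\), so the bound reads \(\epsilon = 2^{-53} \approx 1.1 \cdot 10^{-16}\). This short sketch checks the bound for a few decimal inputs using exact `Fraction` arithmetic; the sample values are arbitrary. It then shows one example of underflow and one of overflow, assuming Python floats are IEEE 754 doubles, as they are on virtually all platforms. IEEE 754 additionally has subnormal numbers below \(\beta^{e_{\mathrm{min}}-1}\), so results are flushed to zero only somewhat below that threshold.

```python
from fractions import Fraction

eps = Fraction(1, 2**53)  # beta^(1-t)/2 with beta = 2, t = 53

samples = ["0.1", "0.2", "0.3", "1234.987", "3.14159"]
for text in samples:
    x = Fraction(text)            # the exact decimal number
    fl_x = Fraction(float(text))  # the double it is rounded to
    rel = abs(x - fl_x) / abs(x)
    print(text, float(rel), rel <= eps)

print(1e-400)      # 0.0: underflow, far below the smallest positive double
print(1e308 * 10)  # inf: overflow
```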

Standard machine representations nowadays (IEEE 754) use

Single precision, allowing for 7-8 significant decimal digits

Double precision, allowing for about 16 significant decimal digits
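Python's `sys.float_info` reports the parameters of the double precision format. Its `min_exp` and `max_exp` follow the same convention \(0.a_1 \ldots a_t \cdot 2^{e}\) as above, so this sketch can check the smallest and largest numbers from the conclusion directly. Note that `sys.float_info.epsilon` is \(\beta^{1-t}\), twice the \(\epsilon\) defined above. Single precision is not a built-in Python type. As a workaround, the sketch rounds a value to single precision and back through the standard `struct` module (4-byte `'f'` format).

```python
import math
import struct
import sys

fi = sys.float_info
beta, t, e_min, e_max = fi.radix, fi.mant_dig, fi.min_exp, fi.max_exp
print(beta, t, e_min, e_max)  # 2 53 -1021 1024

# Smallest positive normalized and largest finite double
print(fi.min == math.ldexp(1.0, e_min - 1))        # beta^(e_min-1): True
print(fi.max == math.ldexp(1.0 - 2.0**-t, e_max))  # beta^e_max * (1 - beta^-t): True

# sys.float_info.epsilon is beta^(1-t); the epsilon defined above is half of it
print(fi.epsilon == 2.0**(1 - t), fi.epsilon / 2)

# Round 0.1 to single precision and back to a double
single = struct.unpack("f", struct.pack("f", 0.1))[0]
print(single)  # 0.10000000149011612: only about 7-8 correct digits
```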

Things we don’t discuss in this lecture but which are important in numerical mathematics

We see that we make an unavoidable error as soon as we enter data from our model into the computer. The same also applies to the realization of basic mathematical operations \(\{+, -, \cdot, /\}\) etc. on a computer.
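One visible consequence is that familiar arithmetic laws hold only approximately. This sketch shows that floating point addition is not associative. Each `+` rounds its result, so the grouping changes which rounding errors occur.

```python
left = (0.1 + 0.2) + 0.3
right = 0.1 + (0.2 + 0.3)
print(left, right)    # 0.6000000000000001 0.6
print(left == right)  # False
```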

Thus, it is important to understand how errors made in a numerical method propagate through numerical algorithms. Keywords for the interested reader are

Forward propagation: How does an initial error and the algorithm affect the final solution?

Backward propagation: If I have a certain error in my final solution, how large was the initial error?
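As a small illustration of forward propagation, consider computing \((1 + x) - 1\) for a tiny \(x\). Storing \(1 + x\) introduces a relative error of at most \(\epsilon\) in that intermediate result. The subtraction then magnifies this error relative to the small final answer. The input value `x = 1e-15` is an arbitrary choice for this sketch.

```python
x = 1e-15
y = (1.0 + x) - 1.0  # mathematically equal to x
rel_error = abs(y - x) / x
print(y, rel_error)  # relative error of about 11 %, although eps is about 1.1e-16
```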